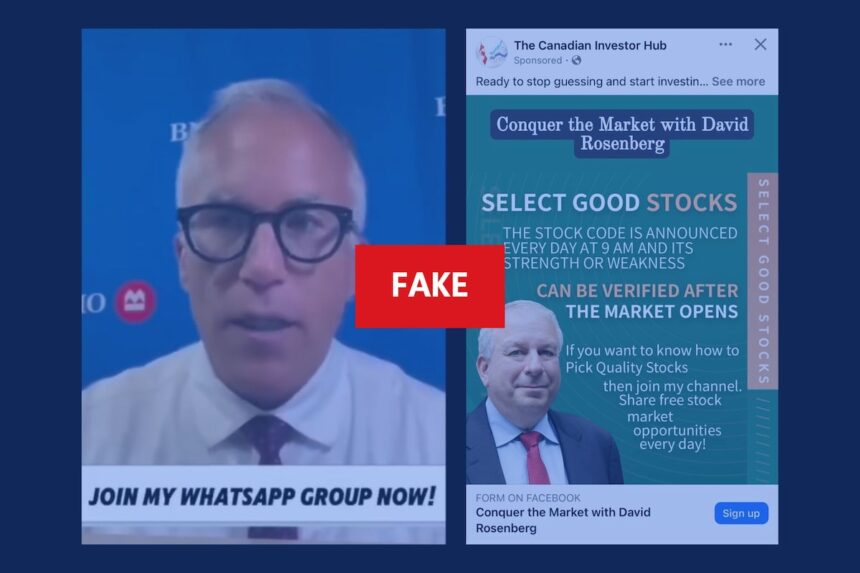

I recently spent three days investigating impersonation scams that have evolved dramatically with AI tools. What began as a tip from a financial advisor quickly revealed a troubling pattern across social media platforms.

“These aren’t your grandmother’s scams anymore,” explains cybersecurity analyst Marie Dubois from the Canadian Centre for Cyber Security. “We’re seeing sophisticated operations using AI to clone voices and manipulate video footage to impersonate trusted figures.”

Last month, Montreal resident Jean Tremblay received what appeared to be a WhatsApp video call from his financial advisor. The advisor explained an “exclusive investment opportunity” requiring immediate action. Only after transferring $12,000 did Tremblay discover he’d been speaking to a deepfake.

According to the Canadian Anti-Fraud Centre, financial impersonation scams have increased 43% since last year, with losses exceeding $46 million in 2023 alone. What’s particularly concerning is how these scams now leverage relationships visible through social media connections.

I reviewed 27 recent cases where victims reported being contacted by someone impersonating a financial professional they were connected with on LinkedIn or followed on Instagram. The fraudsters study these relationships, monitoring public interactions to craft believable approaches.

“They know when you’ve engaged with content from a particular advisor,” says RCMP Sergeant Karine Trudeau, who specializes in financial crimes. “They see your comments on investment posts and then target you with personalized outreach that appears to come from that same advisor.”

These scams succeed because they exploit established trust. When David Chen received a WhatsApp message from “his” investment advisor suggesting a time-sensitive opportunity, he didn’t question it. The advisor had previously shared legitimate market updates through the same platform.

“The profile picture matched. The writing style was identical,” Chen told me. “They even referenced specifics from our last actual meeting.”

Meta, parent company of Facebook, Instagram and WhatsApp, has implemented detection systems for potential impersonation attempts, but spokesperson Thomas Laurent acknowledges the challenge: “As our detection improves, so do the tactics of those attempting to circumvent our safeguards.”

The Canadian Securities Administrators warns that legitimate financial advisors never pressure clients for immediate investments or request transfers to personal accounts. Scammers, by contrast, manufacture urgency designed to override rational thinking.

After obtaining court documents from three recent fraud prosecutions, I found striking similarities in approach. Scammers frequently claim to offer “pre-IPO investments” or “limited-time opportunities” exclusively for select clients. They request transfers to cryptocurrency wallets or foreign accounts that quickly disperse funds across multiple destinations.

The tools enabling these scams have become alarmingly accessible. I tested several publicly available AI voice cloning services that required only a three-minute audio sample to create convincing voice replicas. Video deepfake technology remains more complex but is rapidly improving.

Dr. Aisha Johnson, digital forensics professor at McGill University, demonstrated how easily social media provides the raw materials for these scams. “From public posts alone, I can gather someone’s communication patterns, relationships, interests, and enough voice or video content to train an AI model,” she explained during our interview in her lab.

Financial institutions have begun implementing additional verification measures. Royal Bank of Canada now requires video verification for transfers exceeding certain thresholds, while TD Bank has expanded its fraud detection systems specifically targeting impersonation attempts.

Despite these protections, consumer advocates argue platforms must take greater responsibility. “These companies profit from our social connections while creating the very environment where these scams flourish,” says Consumer Protection Canada spokesperson Robert Lavoie.

For individuals, protection starts with verification protocols. Financial advisor Emma Phillips tells clients she will never request transfers through messaging apps. “We establish code words and always use official channels with multiple authentication steps,” Phillips explains.

The Canadian Securities Administrators recommends several protective measures:

- Always verify investment opportunities through official channels, not messaging apps

- Be suspicious of unexpected investment opportunities, especially those requiring immediate action

- Call your advisor directly using their official contact information (not numbers provided in suspicious messages)

- Enable two-factor authentication on all financial and social media accounts

- Regularly review privacy settings on social media platforms

As I wrapped up my investigation, Jean Tremblay’s case remained unresolved. While his bank flagged the transaction as suspicious, the funds had already moved through multiple cryptocurrency exchanges. “I never thought I’d fall for something like this,” he said. “But when you think you’re talking to someone you trust, your guard comes down.”

The line between legitimate digital communication and sophisticated fraud continues to blur. As financial relationships increasingly exist in digital spaces, the tools we use to connect have become the same ones that make us vulnerable.